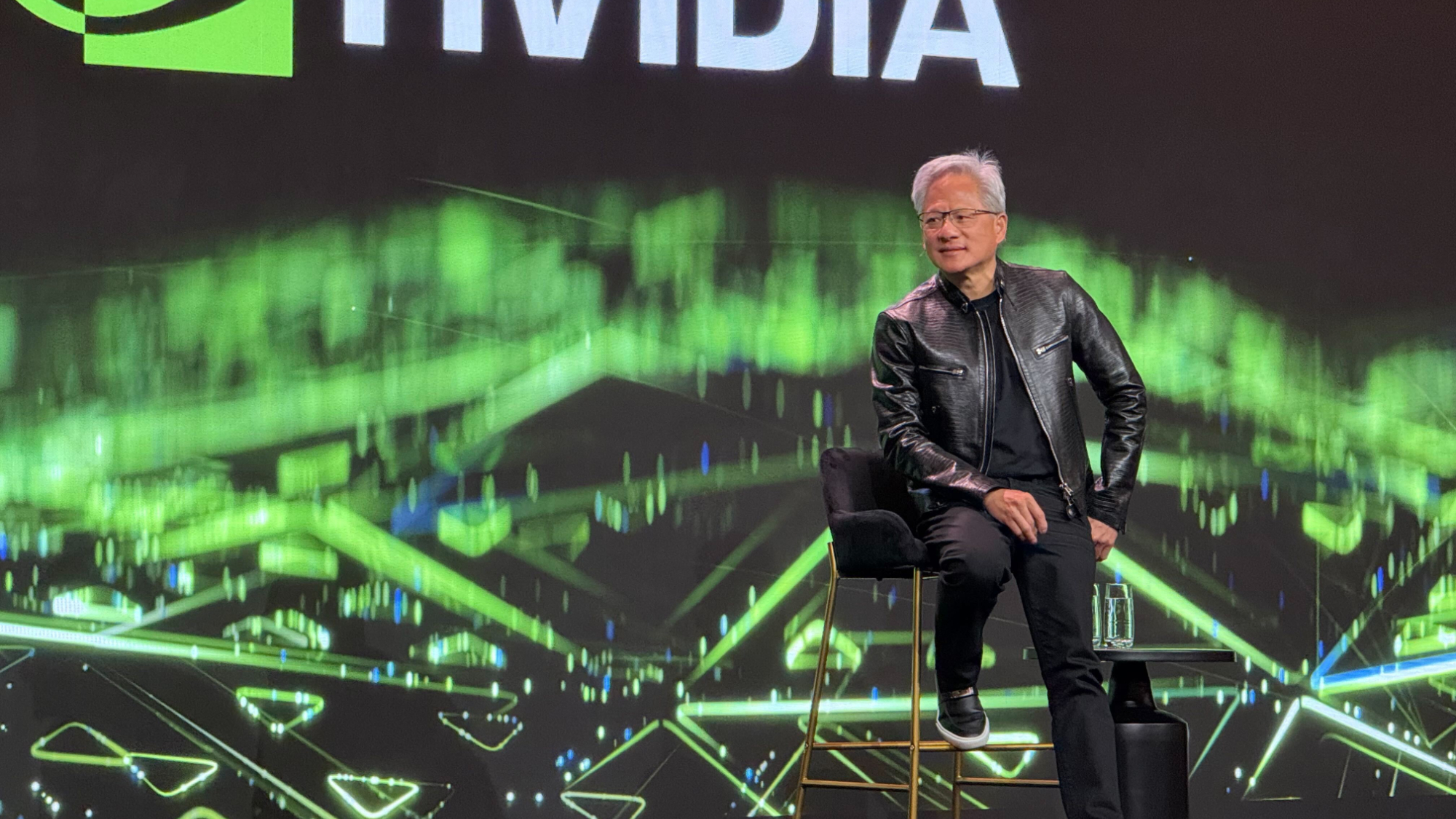

Nvidia CEO Jensen Huang explains why SRAM isn't here to eat HBM's lunch — high bandwidth memory offers more flexibility in AI deployments across a range of workloads

At CES, Jensen Huang was pressed on margins, memory costs, and whether Nvidia’s growing use of SRAM and open AI models might finally loosen the company’s grip on expensive HBM.

(Image credit: Tom's Hardware)

During a CES 2026 Q&A in Las Vegas, Nvidia CEO Jensen Huang was thrown a bit of a curveball. With SRAM-heavy accelerators, cheaper memory, and open-weight AI models gaining traction, could Nvidia eventually ease its dependence on expensive HBM and the margins that come along with it?

Why SRAM looks attractive

Let’s take a step back for a moment and consider what Huang is getting at here. The industry, by and large, is actively searching for ways to make AI cheaper. SRAM accelerators, GDDR inference, and open-weight models are all being pitched as pressure valves on Nvidia’s most expensive components. Huang’s remarks are a reminder that while these ideas work in isolation, they collide with reality once they’re exposed to production-scale AI systems.

Huang did not dispute the performance advantages of SRAM-centric designs. In fact, he was explicit about their speed. "For some workloads, it could be insanely fast," he said, noting that SRAM access avoids the latency penalties of even the fastest external memory. "SRAM’s a lot faster than going off to even HBM memories."

This is why SRAM-heavy accelerators look so compelling in benchmarks and controlled demos. Designs that favor on-chip SRAM can deliver high throughput in constrained scenarios, but they run up against capacity limits in production AI workloads, because SRAM cannot match the balance of bandwidth and density that HBM provides. That is why most modern AI accelerators continue to pair compute with high-bandwidth DRAM packages.

But Huang repeatedly returned to scale and variation as the breaking point. SRAM capacity simply does not grow fast enough to accommodate modern models once they leave the lab. Even within a single deployment, models can exceed on-chip memory as they add context length, routing logic, or additional modalities.

The moment a model spills beyond SRAM, the efficiency advantage collapses. The system either stalls or has to fall back on external memory, and at that point the specialized design loses its edge. Huang’s argument was grounded in how production AI systems evolve after deployment. "If I keep everything on SRAM, then of course I don’t need HBM memory," he said, adding that "...the problem is the size of my model that I can keep inside these SRAMs is like 100 times smaller."
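To make the capacity argument concrete, here is a rough back-of-the-envelope sketch in Python. Huang gave no capacity figures, so the numbers are hypothetical: a 1 GiB on-chip budget, an external budget 100 times larger (mirroring only the ratio in his quote), and an 8-billion-parameter model with 16-bit weights. It also counts weights alone and ignores context caches and activations, which would only widen the gap.

```python
GIB = 1024 ** 3

# Hypothetical capacities: only the ~100x ratio comes from Huang's remark.
ONCHIP_CAPACITY_BYTES = 1 * GIB
EXTERNAL_CAPACITY_BYTES = 100 * ONCHIP_CAPACITY_BYTES


def weight_footprint_bytes(num_params: int, bytes_per_param: int) -> int:
    """Memory needed to hold the model weights alone."""
    return num_params * bytes_per_param


def fits(num_params: int, bytes_per_param: int, capacity_bytes: int) -> bool:
    """True if the weights fit entirely within the given memory budget."""
    return weight_footprint_bytes(num_params, bytes_per_param) <= capacity_bytes


def largest_model_params(capacity_bytes: int, bytes_per_param: int) -> int:
    """Largest parameter count whose weights fit within the budget."""
    return capacity_bytes // bytes_per_param


# Hypothetical model: 8 billion parameters stored as 16-bit (2-byte) weights.
model_params = 8_000_000_000
bytes_per_param = 2

fits_onchip = fits(model_params, bytes_per_param, ONCHIP_CAPACITY_BYTES)
fits_external = fits(model_params, bytes_per_param, EXTERNAL_CAPACITY_BYTES)

print(f"Fits in on-chip budget:  {fits_onchip}")
print(f"Fits in external budget: {fits_external}")
print(
    "Largest model on-chip vs. external (params): "
    f"{largest_model_params(ONCHIP_CAPACITY_BYTES, bytes_per_param):,} vs. "
    f"{largest_model_params(EXTERNAL_CAPACITY_BYTES, bytes_per_param):,}"
)
```

Under these assumed numbers the model overflows the on-chip budget but fits comfortably in the larger one, which is the spill-over scenario Huang described.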