Nvidia's focus on rack-scale AI systems is a portent for the year to come — Rubin points the way forward for company, as data center business booms

Nvidia's CES keynote was notably not consumer-focused. Instead, the company detailed its booming data center business, with a deeper look at its NVL72 Rubin platform.

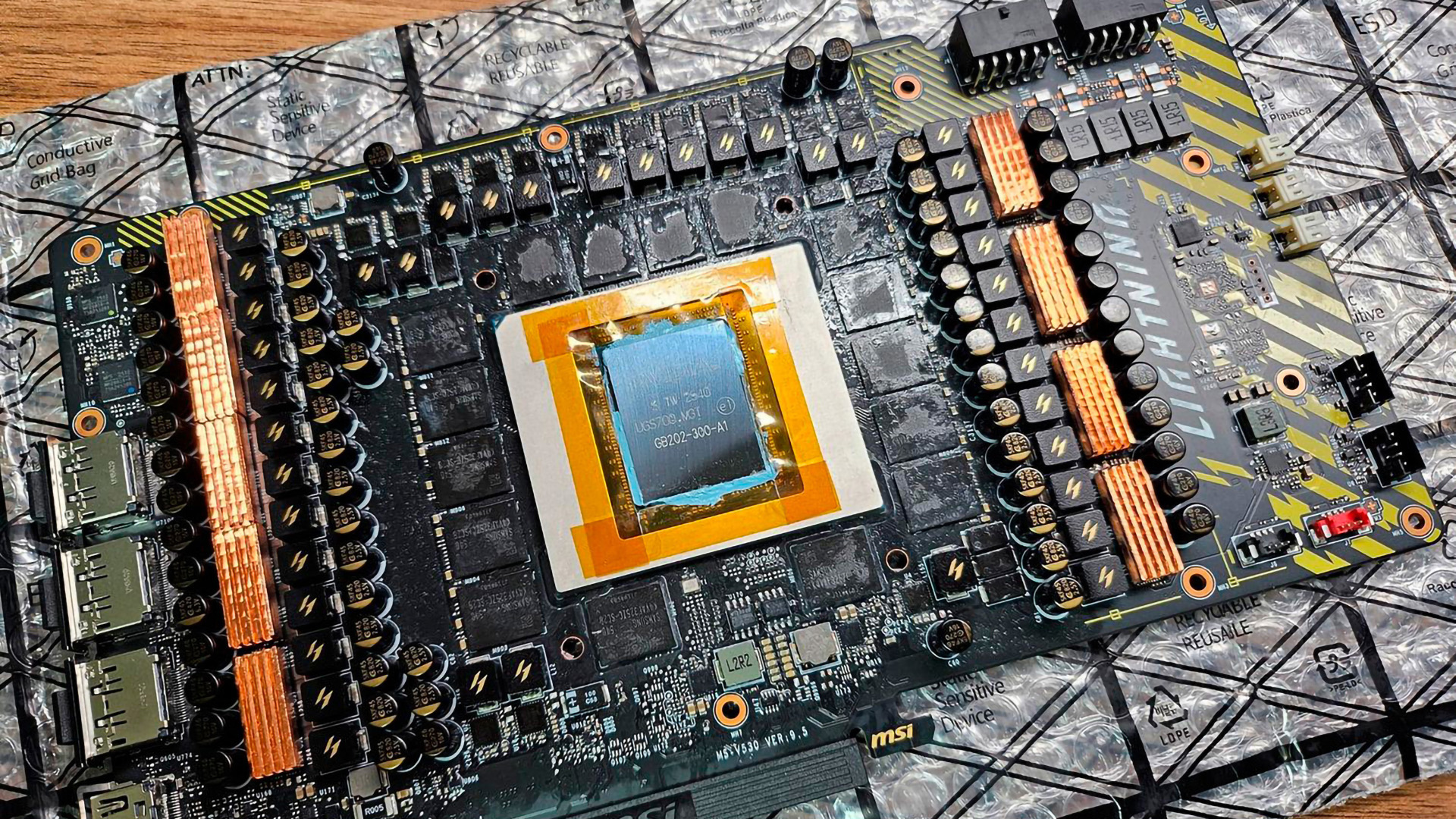

(Image credit: Tom's Hardware)

Tom's Hardware Premium Roadmaps

Those who tuned into Nvidia’s CES keynote on January 5 may have found themselves waiting for a familiar moment that never arrived. There was no GeForce reveal and no tease of the next RTX generation. For the first time in roughly five years, Nvidia stood on the CES stage without a new GPU announcement to anchor the show.

That absence was no accident. Rather than refresh its graphics lineup, Nvidia used CES 2026 to talk about the Vera Rubin platform and launch its flagship NVL72 AI supercomputer, both slated for production in the second half of 2026 — a reframing of what Nvidia now considers its core product. The company is no longer content to sell accelerators one card at a time; it is selling entire AI systems instead.

From GPUs to AI factories

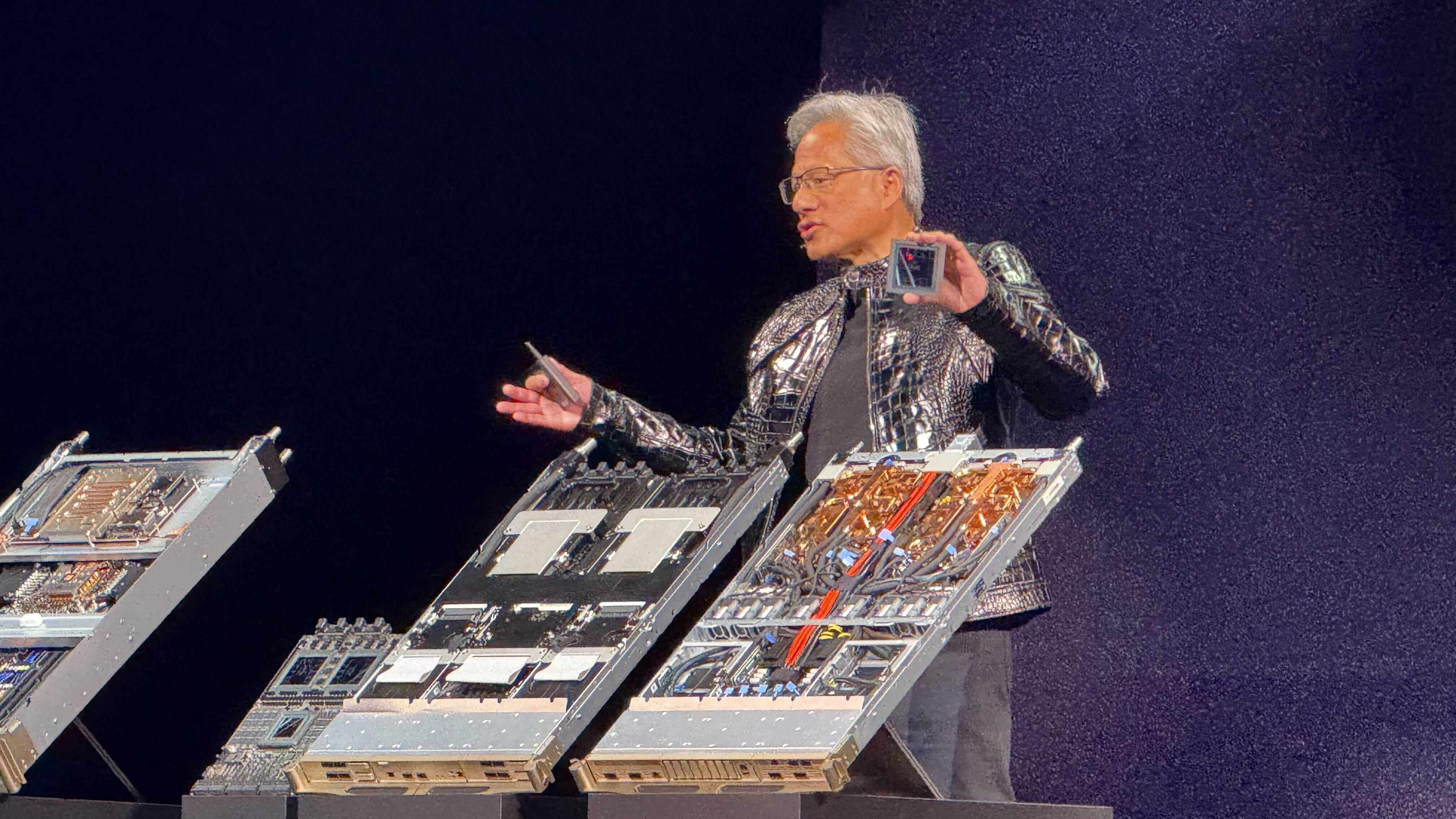

(Image credit: Nvidia/YouTube)

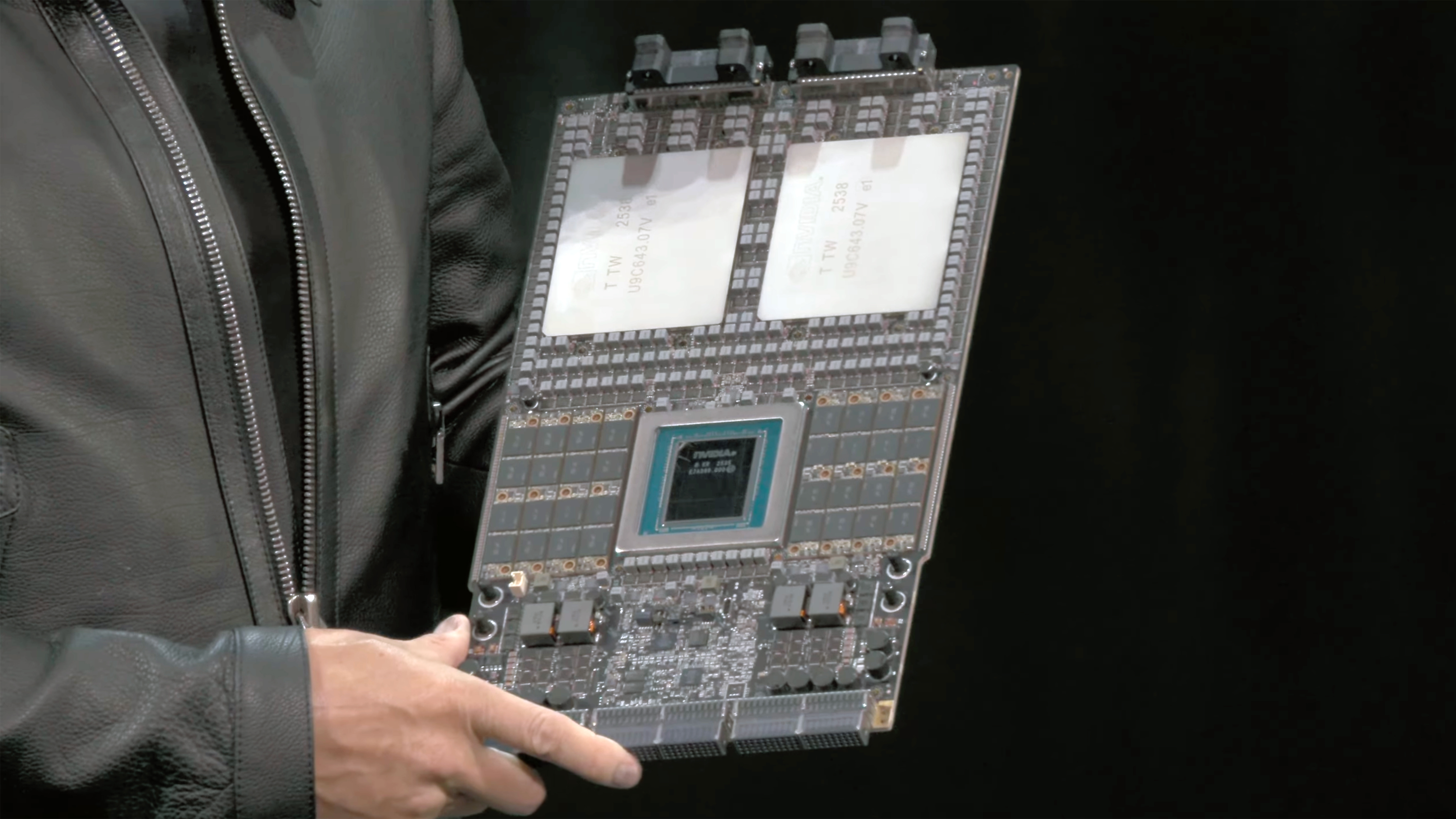

Vera Rubin is not being positioned as a conventional GPU generation, even though it includes a new GPU architecture. Nvidia describes it as a rack-scale computing platform built from multiple classes of silicon that are designed, validated, and deployed together. At its center are Rubin GPUs and Vera CPUs, joined by NVLink 6 interconnects, BlueField 4 DPUs, and Spectrum 6 Ethernet switches.

Each rack integrates 72 Rubin GPUs and 36 Vera CPUs into a single logical system. Nvidia says each Rubin GPU can deliver up to 50 PFLOPS of NVFP4 compute for AI inference using low-precision formats, roughly five times the throughput of its Blackwell predecessor in similar inference workloads. Memory capacity and bandwidth scale accordingly, with HBM4 pushing hundreds of gigabytes per GPU and aggregate rack bandwidth measured in the hundreds of terabytes per second.

These monolithic Vera Rubin systems are designed to reduce the cost of inference by an order of magnitude compared with Blackwell-based deployments. That claim rests on several pillars: higher utilization through tighter coupling, reduced communication overhead via NVLink 6, and architectural changes that target the realities of large language models rather than traditional HPC workloads.